Google Reconnaissance, Sprinter-style

When doing security assessments or penetration tests, there are a significant number of findings you can get from search engines. For instance, if a client has exposed sensitive information or any number of common vulnerabilities, you can often find them with a Google or Bing search, without sending a single packet to the client’s infrastructure.

This concept is called “Google dorking”, and was pioneered by Johnny Long back in the day (he has since moved on to other projects; see http://www.hackersforcharity.org ).

In a few recent engagements, we actually found password hashes in a “passwd” file, and passwords in a “passwords.txt” file are a fairly common find as well.

Search terms: inurl:www.customer.com passwords

Or inurl:www.customer.com passwd

Excel documents (always a great target) can be found with a simple search:

inurl:www.customer.com ext:xls

Or configuration files:

ext:cfg

Or ext:conf

Or something you may not have thought of: security cameras. Folks are stampeding to put their security cameras online, and guess how much effort they put into securing them (usually less than none). Not only do you get the security footage if you gain access to one of these, they're usually running older, unpatched Linux distributions, so in a penetration test they make great toehold hosts from which to pivot into the inside network.

To find JVC Web Cameras:

intext:"Welcome to the Web V.Networks" intitle:"V.Networks [Top]" -filetype:htm

Finding things like webcams is sometimes easier on Bing, which has an “ip:” search operator, so you can find things that are indexed but aren’t hosted on a site with a domain name.
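To sweep a customer's address space with Bing's “ip:” operator, you could generate one query per address. This is a minimal sketch, assuming you already know the customer's public netblock (192.0.2.0/29, a documentation range, stands in for it here); the function name is hypothetical, and you would still need to submit the queries yourself.

```python
import ipaddress


def bing_ip_queries(cidr: str, extra_terms: str = "") -> list[str]:
    """Build one Bing "ip:" query per usable host address in a netblock."""
    # strict=False tolerates a CIDR written with host bits set (e.g. 192.0.2.5/29)
    network = ipaddress.ip_network(cidr, strict=False)
    queries = []
    for host in network.hosts():  # skips network/broadcast addresses for IPv4
        query = f"ip:{host}"
        if extra_terms:
            # Optional refinement, e.g. a page title from a camera dork
            query += f" {extra_terms}"
        queries.append(query)
    return queries


if __name__ == "__main__":
    for q in bing_ip_queries("192.0.2.0/29"):
        print(q)
```

A /29 yields six host addresses, so six queries; a /24 would yield 254, which is why this kind of sweep belongs in a script rather than a browser.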

… You get the idea. After you total everything up, there are several thousand things you (or your customer) should be concerned about that can be found with nothing more than a search engine.
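One way to get from a handful of examples to thousands of searches is to keep the dorks as templates and expand them per customer host. This sketch reuses the searches shown earlier; scoping the ext:cfg and ext:conf searches to the customer host is an assumption, and the names `SITE_TEMPLATES`, `GLOBAL_DORKS` and `build_dorks` are hypothetical.

```python
from collections.abc import Iterable

# Per-customer templates; "{site}" is replaced with each customer host.
# Scoping ext:cfg / ext:conf to the host is an assumption for this sketch.
SITE_TEMPLATES = (
    "inurl:{site} passwords",
    "inurl:{site} passwd",
    "inurl:{site} ext:xls",
    "inurl:{site} ext:cfg",
    "inurl:{site} ext:conf",
)

# Searches not tied to one customer, such as the JVC camera dork.
GLOBAL_DORKS = (
    'intext:"Welcome to the Web V.Networks" intitle:"V.Networks [Top]" -filetype:htm',
)


def build_dorks(
    sites: Iterable[str],
    site_templates: tuple[str, ...] = SITE_TEMPLATES,
    global_dorks: tuple[str, ...] = GLOBAL_DORKS,
) -> list[str]:
    """Expand every template for every site, then append the global dorks."""
    queries = [t.format(site=s) for s in sites for t in site_templates]
    queries.extend(global_dorks)
    return queries


if __name__ == "__main__":
    for query in build_dorks(["www.customer.com"]):
        print(query)
```

With a few hundred templates and a handful of customer hosts, the list quickly runs into the thousands.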

With several thousand things to check, there’s no doing this manually. In past projects, I wrote some simple batch files to do this, with a 2- or 3-minute wait between searches to help keep Google from deciding “looks like a hacker search bot to me”. When that happens, Google pops up a CAPTCHA, and if you don’t answer it, you’re “on hold” for some period of time before you can resume.
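The batch-file approach boils down to a loop with a randomized pause between searches. This is a sketch of that idea, not the original batch files: `search` is a hypothetical placeholder for whatever actually fetches results, the 120 to 180 second bounds mirror the 2 to 3 minute wait, and no delay guarantees Google won't challenge you anyway.

```python
import random
import time
from collections.abc import Callable, Iterable


def run_throttled(
    queries: Iterable[str],
    search: Callable[[str], object],
    min_delay: float = 120.0,
    max_delay: float = 180.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict[str, object]:
    """Run each query through `search`, pausing a random interval between them."""
    results: dict[str, object] = {}
    for i, query in enumerate(queries):
        if i > 0:
            # Randomized 2-3 minute gap so requests don't arrive on a fixed beat
            sleep(random.uniform(min_delay, max_delay))
        results[query] = search(query)
    return results
```

The `sleep` parameter exists so the loop can be exercised without actually waiting. It also makes the cost obvious: at an average of 2.5 minutes per search, 2,000 searches take about 5,000 minutes, or roughly three and a half days.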

However, in my latest project I’ve seen that Google in particular has become much more sensitive to this kind of activity, to the point that it’s a real challenge to get a full “run” of searches done. This can be a real problem, because what you find in reconnaissance is often very useful in subsequent phases of a pentest or assessment. For instance, recon will often show that a site has a login page but that its individual pages are indexed anyway, so simply browsing to those pages directly is an authentication bypass that gets you the entire site. This can save you a *boatload* of effort, or find things you never would have seen otherwise. Leveraging search engines will also sometimes turn up your customer’s information on sites that aren’t theirs. Those sites are generally out of scope for any “active” pentest activities, but the fact that the data is found elsewhere is often a very valuable finding.

So, with a typical “dork” run taking in excess of 3 days, what to do? One option is simply to change search engines. For example, Baidu (a popular China-based search engine) doesn’t appear to check for this sort of “dork” activity. In the words of John Strand, “Baidu is the honey badger of search engines: they just don’t care”. While you might get the same results, using a China-based search engine doesn’t inspire confidence in some customers.

The path I took was to use the Google Search API (Bing offers a similar service). You can sign up for the API at the Google Developers Console, found here:

https://console.developers.google.com/project?authuser=0

The Bing equivalent is here:

https://www.bing.com/developers/appids.aspx

Now, with an API key you can simply plug that key into your tool of choice (I often use either GoogleDiggity or Recon-NG, but you can write your own easily enough), and you are good for thousands of searches per day! An entire run that might have taken 3 days using a traditional “scraping” approach can now be completed in about 20 minutes.
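If you'd rather write your own client, the core of it is small. This sketch assumes Google's Custom Search JSON API endpoint (`https://www.googleapis.com/customsearch/v1`) with its `key`, `cx` (search engine ID), `q` and `start` parameters, and that matches come back in an `items` list whose entries carry `title` and `link`. The function names are hypothetical, and there is no paging loop, retry or quota handling.

```python
import json
import urllib.parse
import urllib.request

CSE_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_cse_url(api_key: str, engine_id: str, query: str, start: int = 1) -> str:
    """Build a Custom Search JSON API request URL; `start` is the 1-based result offset."""
    params = {"key": api_key, "cx": engine_id, "q": query, "start": start}
    return f"{CSE_ENDPOINT}?{urllib.parse.urlencode(params)}"


def extract_links(response: dict) -> list[tuple[str, str]]:
    """Pull (title, link) pairs from a decoded response; 'items' is absent when nothing matched."""
    return [(item.get("title", ""), item["link"]) for item in response.get("items", [])]


def cse_search(api_key: str, engine_id: str, query: str) -> list[tuple[str, str]]:
    """Fetch and parse the first page of results for one query."""
    with urllib.request.urlopen(build_cse_url(api_key, engine_id, query), timeout=30) as resp:
        return extract_links(json.load(resp))
```

For example, `cse_search(API_KEY, ENGINE_ID, "inurl:www.customer.com ext:xls")` would return the first page of hits as (title, link) pairs; passing a larger `start` to `build_cse_url` pages further.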

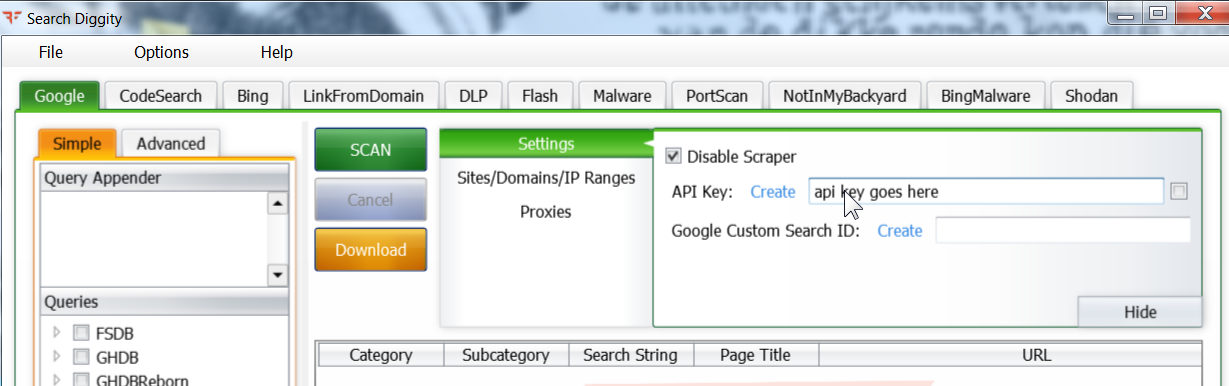

So for instance, to configure GoogleDiggity, disable scraping and insert your search API Key and Search as shown here:

There's a helpful “create” option if you don't have a custom search engine of your own set up yet.

Lastly, on your Google CSE setup page (https://cse.google.com/cse/setup ), open the “Basic” tab, add the domains of interest for your client in the “Sites to Search” section, then change the scope from “Search Only Included sites” to “Search the entire web but emphasize included sites”. This will allow you to find things like sensitive customer information stored on sites *other* than the ones in your list.
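The same idea can also be applied per query. The Custom Search JSON API accepts `siteSearch` and `siteSearchFilter` parameters, where a filter of `"e"` excludes the named site and `"i"` restricts results to it. This sketch assumes an engine set to search the entire web and looks for customer data everywhere except the customer's own domain; the function name and example query are hypothetical.

```python
import urllib.parse

CSE_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def offsite_query_url(api_key: str, engine_id: str, query: str, customer_site: str) -> str:
    """Search the whole web for `query` while excluding the customer's own site."""
    params = {
        "key": api_key,
        "cx": engine_id,
        "q": query,
        "siteSearch": customer_site,  # the site to filter on
        "siteSearchFilter": "e",      # "e" = exclude that site, "i" = include only it
    }
    return f"{CSE_ENDPOINT}?{urllib.parse.urlencode(params)}"
```

Anything such a query returns lives outside the customer's own site, which is exactly the “found elsewhere” category that is usually out of scope for active testing but still worth reporting.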

You can expand on this approach with API keys for other recon engines as well. Shodan would be a logical next step; its API subscription options are here:

https://developer.shodan.io/billing
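A Shodan lookup can be scripted in the same spirit. This sketch assumes Shodan's REST endpoints `https://api.shodan.io/shodan/host/search` and `/shodan/host/count`, the `key` and `query` parameters, and a search response whose `matches` entries include `ip_str`, `port` and `org`. Query filters such as `net:` and `hostname:` are Shodan's own; the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

SHODAN_API = "https://api.shodan.io"


def shodan_url(api_key: str, query: str, count_only: bool = False) -> str:
    """Build a Shodan host search (or count) URL for a query such as 'net:192.0.2.0/24'."""
    path = "/shodan/host/count" if count_only else "/shodan/host/search"
    return f"{SHODAN_API}{path}?{urllib.parse.urlencode({'key': api_key, 'query': query})}"


def summarize_matches(response: dict) -> list[str]:
    """Turn a decoded search response into 'ip:port org' lines."""
    return [
        f"{m.get('ip_str', '?')}:{m.get('port', '?')} {m.get('org') or ''}".rstrip()
        for m in response.get("matches", [])
    ]


def shodan_search(api_key: str, query: str) -> list[str]:
    """Run one host search and summarize the first page of matches."""
    with urllib.request.urlopen(shodan_url(api_key, query), timeout=30) as resp:
        return summarize_matches(json.load(resp))
```

For example, `shodan_url(API_KEY, "net:192.0.2.0/24", count_only=True)` asks only for the number of matches, which gives a quick size estimate for a netblock before you pull full results.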

Please use our comment form to share what APIs or tools you've used for reconnaissance. If your NDA permits, feel free to share some of the more interesting things you've found as well!

===============

Rob VandenBrink
