Be Careful What You Wish For!

I was working on an ESX upgrade project for a client last week, and had an incident (lower case "i") that I thought might be interesting to our readers.

I had just finished upgrading the vCenter server (vCenter is the management application for vSphere environments), everything looked good, and I was on my way home. That's when it happened - I got "the call". If you're a consultant, or have employed a consultant, you know the call I'm talking about. "The vCenter server seems to be *really* slow," my client said, "just since you upgraded it." Oh Darn! I said to myself (ok, maybe I didn't say exactly that, but you get my drift). I re-checked the hardware requirements for vCenter 5.1 as compared to 4.1, and the VM it was running on met them; a quick check of CPU and memory use also looked fine. OK, over to the event log we go.

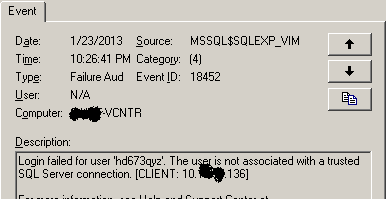

Ah-ha! What's this?

Now don't that look suspicious? Who would try logging into SQL with a userid like "hd673qyz"? A brute-force bit of malware maybe? A quick check showed that the IP in question was still live on the network, but it didn't resolve on my client's internal DNS server. So it's not a server, and it's not a client in Active Directory - this thing is not "one of ours", as they say. Now things are getting interesting!
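The triage in the last two paragraphs (spot a burst of failed SQL logins, then see whether the source address resolves in internal DNS) is easy to script. Below is a minimal Python sketch, not the process used in this incident. It assumes the event-log entries have been exported as plain text and use SQL Server's standard failed-login wording (event ID 18456), i.e. `Login failed for user 'NAME'. Reason: ... [CLIENT: IP]`. The function names, the `threshold` of 5 and the pluggable `resolver` argument are hypothetical choices for illustration.

```python
import re
import socket
from collections import Counter
from typing import Callable, Iterable, Iterator, Optional, Tuple

# Assumed message format (SQL Server failed login, event ID 18456):
#   Login failed for user 'NAME'. Reason: ... [CLIENT: IP]
FAILED_LOGIN = re.compile(
    r"Login failed for user '(?P<user>[^']*)'.*?\[CLIENT: (?P<ip>[^\]]+)\]",
    re.DOTALL,
)


def count_failed_logins(messages: Iterable[str]) -> Counter:
    """Count failed-login messages per client IP address."""
    counts: Counter = Counter()
    for msg in messages:
        match = FAILED_LOGIN.search(msg)
        if match:
            counts[match.group("ip").strip()] += 1
    return counts


def reverse_lookup(ip: str, resolver: Callable = socket.gethostbyaddr) -> Optional[str]:
    """Return the PTR hostname for ip, or None if DNS has no record for it."""
    try:
        return resolver(ip)[0]
    except (socket.herror, socket.gaierror):
        return None


def suspicious_sources(
    messages: Iterable[str],
    threshold: int = 5,
    resolver: Callable = socket.gethostbyaddr,
) -> Iterator[Tuple[str, int, Optional[str]]]:
    """Yield (ip, failures, hostname) for sources at or above the threshold,
    busiest first. hostname is None when the reverse lookup fails."""
    for ip, failures in count_failed_logins(messages).most_common():
        if failures >= threshold:
            yield ip, failures, reverse_lookup(ip, resolver)
```

A source that shows up with many failures and a `hostname` of `None` is exactly the "not one of ours" signal described above. Injecting `resolver` keeps the logic testable without a live DNS server.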

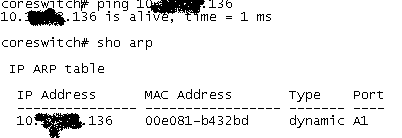

Let's dig just a bit more, this time on the switching infrastructure - getting the MAC address and identifying the switch port it's on.
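Getting from an IP address to a switch port usually means reading the MAC address out of an ARP table (`arp -a` on a Windows host in the same subnet, for example, or the router's ARP cache) and then searching the switch's MAC address table for it. Windows prints MACs as `00-1a-2b-3c-4d-5e`, while Cisco IOS displays them as `001a.2b3c.4d5e`. The post doesn't say what switches were involved, so this Python sketch assumes Cisco IOS gear; it only normalizes the address and builds the lookup command string, and both function names are hypothetical.

```python
import re

# Accepted input formats (case-insensitive):
#   00-1a-2b-3c-4d-5e / 00:1a:2b:3c:4d:5e  (one separator used consistently)
#   001a.2b3c.4d5e                          (Cisco dotted)
#   001a2b3c4d5e                            (bare hex)
_MAC_PATTERNS = (
    re.compile(r"[0-9a-f]{2}([-:])[0-9a-f]{2}(?:\1[0-9a-f]{2}){4}"),
    re.compile(r"[0-9a-f]{4}\.[0-9a-f]{4}\.[0-9a-f]{4}"),
    re.compile(r"[0-9a-f]{12}"),
)


def to_cisco_mac(mac: str) -> str:
    """Normalize a 48-bit MAC address to Cisco's dotted format (001a.2b3c.4d5e)."""
    text = mac.strip().lower()
    if not any(pattern.fullmatch(text) for pattern in _MAC_PATTERNS):
        raise ValueError(f"not a recognized 48-bit MAC address: {mac!r}")
    digits = re.sub(r"[-:.]", "", text)
    return ".".join(digits[i:i + 4] for i in range(0, 12, 4))


def mac_lookup_command(mac: str) -> str:
    """Build the IOS command that finds which port a MAC was learned on."""
    return f"show mac address-table address {to_cisco_mac(mac)}"
```

The port column in that command's output names the interface; if it points at an uplink rather than an access port, repeat the lookup on the next switch down.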

At this point, I call my client back, and ask if he might know what this offending device is, and if he maybe wants to shut that switch port down until he can deal with it.

This brings some new information into the mix - he asks me "I wonder if that's our XYZ scanner?" (insert a name-brand security scanner here, often used for PCI scans). This question was followed immediately by a face-palm moment, because the scanner - with its logo prominently displayed - had been on the desk beside me the entire day while I was there! He was doing absolutely the right thing, something I wish more folks did: he was periodically scanning both his internal and perimeter networks for changes and security issues.

Sometimes, the change you just made isn't the cause of the problem that's just come up. OK, usually the problem is related to your change, but sometimes, as they say, "coincidences happen" - ok, maybe they don't say *exactly* that, but it's close. When we're engaged to do penetration tests and security scans, we always caution clients that the act of scanning can cause performance issues and service interruptions. When you run your own scans internally, keep in mind that this caveat still applies. It's very easy to DoS internal services by changing one tick-box in your scanner (enabling password-guessing checks against database logins, for example).

In the end, this incident had a positive outcome. We'll be changing the Windows Firewall settings on the vCenter server to restrict SQL access to local connections only (the vCenter server itself) and deny network access to SQL. There's no reason at all to offer up SQL services to everyone on the network if only local services need them. We likely would have gotten there anyway: the vSphere Hardening Guide calls this out in the guideline "restrict-network-access". That guideline doesn't specifically mention the SQL ports, but the Hardening Guide does recommend using the host firewall on the vCenter Server to block ports that don't need a network presence.
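Once a firewall rule like that is in place, it's worth confirming from another machine on the network that the SQL port really is unreachable. Here is a minimal Python sketch of that check. It assumes the default SQL Server port, TCP 1433; a named instance may listen on a different or dynamically assigned port, so confirm the instance's actual port first. It only tests whether a TCP connection can be opened and never attempts a SQL login.

```python
import socket


def tcp_port_open(host: str, port: int = 1433, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Any OSError counts as "not reachable": a refused connection, a timeout
    (socket.timeout is an OSError subclass) or a name-resolution failure.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Run from a workstation, `tcp_port_open("vcenter.example.local")` (a hypothetical host name) should return `False` after the change. A refused connection and a silently dropped one both come back as `False`; if you need to tell them apart, catch `ConnectionRefusedError` and `socket.timeout` separately.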

After all was said and done, I find my taste for irony isn't what it used to be. When clients take your advice (in this case, scheduled scans of the internal network), you don't have to look far when it comes back to bite you!

If you've had a success story, where you implemented a scheduled scanning process and found an unexpected issue that needed a resolution, please let us know in our comment form. Alternatively, if you've accidentally DoS'd a production service, that also makes a great comment!

===============

Rob VandenBrink

Metafore

Comments

Peter

Jan 29th 2013

Carl

Jan 29th 2013

Stephane

Jan 29th 2013

Orv

Jan 29th 2013

Joshua

Jan 29th 2013

Of course this wasn't a planned "change", just a small test on my part to try out the vulnerability scanner. Result - the email service was down for the whole day.

They discovered the problem, and thought that the best fix would be a CAPTCHA. But... taking on this responsibility was too much for some people.

So.. here I was. A year later I tried a web application vulnerability scanner again (I don't remember which one). Result -> no email for the whole day, because they hadn't fixed the problem.

Things happen :)

Luc

Jan 30th 2013