Evolution of Artificial Intelligence Systems and Ensuring Trustworthiness

We live in a dynamic age, with users and organizations alike increasingly aware of, and exploring, Artificial Intelligence (AI) systems. A junior researcher recently asked me how AI systems came about, and I realized I could not answer immediately. I had a rough idea of what led to the current generative models and large language models, but only a fuzzy understanding of what came before them, beyond being confident that neural networks were involved. Unsatisfied with my limited understanding of how AI systems evolved, I decided to explore how they were conceptualized and developed into their current state, and to share what I learnt in this diary. However, knowing only how to use these systems without being able to ensure their trustworthiness (especially if organizations want to use them for increasingly critical business activities) could expose organizations to far more risk than senior leadership would accept. As such, I will also suggest some approaches (technical, governance, and philosophical) to ensure the trustworthiness of these AI systems.

AI systems were not built overnight; the forebears of computer science and AI thought at length about how to create a system that resembles the human brain. Beyond the usual considerations, such as the Turing test and the cognitive and rational approaches, AI drew on eight foundational disciplines [1]. These are illustrated in Figure 1 below:

Figure 1: Foundational Disciplines Used in AI Systems

Each foundational discipline also contributed its own set of questions, which collectively inform the design of an AI system. They are summarized in Table 1 below [1]:

| Foundational Discipline | Considerations |
|---|---|
| Philosophy | - Where does knowledge come from?<br>- Can formal rules be used to draw valid conclusions?<br>- How does the mind arise from a physical brain?<br>- How does knowledge lead to action? |
| Mathematics | - What can be computed?<br>- How do we reason with uncertain information?<br>- What are the formal rules to draw valid conclusions? |
| Economics | - How should we make decisions in accordance with our preferences?<br>- How should we do this when others may not go along?<br>- How should we do this when the payoff may be far in the future? |
| Neuroscience | - How do brains process information? |
| Psychology | - How do humans and animals think and act? |
| Computer Engineering | - How can an efficient computer be built? |
| Control Theory and Cybernetics | - How can artifacts operate under their own control? |
| Linguistics | - How does language relate to thought? |

For brevity’s sake, I will skip a detailed history of AI. However, the first work now generally recognized as AI deserves at least a mention: Warren McCulloch and Walter Pitts’ 1943 research on artificial neurons [1]. As research on AI progressed, distinct iterations of AI systems emerged. These are listed in Table 2 below in chronological order, along with their salient characteristics and, where applicable, their limitations:

| Classification of AI Systems | Details |
|---|---|
| Problem-solving (Symbolic) Systems | - 1950s to 1980s era<br>- Symbolic and declarative knowledge representations<br>- Logic-based reasoning (e.g. propositional, predicate, or higher-order logic)<br>- Rule-based (rules specify how to derive new knowledge or perform certain tasks based on input data and the current state of the system)<br>- Struggled with uncertainty and real-world complexity |
| Knowledge, Reasoning, and Planning (Expert) Systems | - 1970s to 1980s era<br>- Knowledge base (typically represented in a structured form, such as rules, facts, procedures, heuristics, or ontologies)<br>- Inference engine (applies logical rules, inference mechanisms, and reasoning algorithms to derive conclusions, make inferences, and solve problems based on the available knowledge)<br>- Rule-based reasoning (rules define conditions, or antecedents, and actions, or consequents, specifying how to make decisions or perform tasks based on input data and the current state of the system; the inference engine evaluates the rules and triggers the actions whose conditions are met)<br>- Limited by static knowledge representations |
| Machine Learning (ML) Systems | - 1980s to 2000s era<br>- Feature extraction and engineering (data features or attributes are extracted, transformed, and combined)<br>- Model selection and evaluation (e.g. linear regression, decision trees, support vector machines, neural networks, and ensemble methods)<br>- Generalization (the ability of a model to perform accurately on unseen data)<br>- Hyperparameter tuning (tuning the parameters that control the learning process and model complexity; grid search, random search, and Bayesian optimization are commonly used)<br>- Initially constrained by limited compute power; performance improved significantly in the early 21st century as compute power advanced |
| Deep Learning Systems | - 2000s to present<br>- Subset of ML systems (uses neural networks with multiple layers)<br>- Feature hierarchies and abstractions (lower layers learn low-level features such as edges and textures, while higher layers learn more abstract concepts and representations, such as object parts or semantic concepts)<br>- Scalability (particularly well suited to image and speech recognition, natural language processing, and other applications with massive datasets) |
| Generative Models [Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs)] | - 2010s to present<br>- Data generation (produce new data samples based on the training data; samples can be images, text, audio, or any other type of data the model is trained on)<br>- Probability distribution modelling (capture the statistical dependencies and correlations between features or components of the training data, and generate new samples exhibiting similar properties)<br>- Variability and diversity (multiple plausible samples for a given input or condition can be generated by sampling from the learned probability distribution)<br>- Unsupervised learning (trained on unlabelled or partially labelled data so the model captures the inherent structure and patterns within it) |
| Transfer Learning / Large Language Models | - 2010s to present<br>- Reuse of pre-trained models (pre-trained models are fine-tuned or adapted to new tasks with limited labelled data)<br>- Domain adaptation (e.g. a model pre-trained on general text can be fine-tuned for specific domains such as legal documents, medical texts, or social media posts)<br>- Fine-grained representations (encode word semantics, syntax, grammar, sentiment, and topic coherence, enabling the model to capture diverse aspects of language)<br>- Multi-task approach (enables the model to learn more generalized representations of text, improving performance on downstream tasks) |
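
The rule-based reasoning described for expert systems in Table 2 can be illustrated with a minimal forward-chaining inference engine. This is a Python sketch under simplifying assumptions (facts are plain strings, rules only assert new facts, and there is no conflict resolution); the rule names and facts are hypothetical and not drawn from any real expert system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An IF-THEN rule: if every antecedent is a known fact, assert the consequent."""
    name: str
    antecedents: frozenset[str]
    consequent: str


def forward_chain(facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Keep firing rules whose conditions are met until no new facts are derived.

    Returns the final set of known facts and the names of the rules that fired,
    in firing order (a simple explanation trace).
    """
    known = set(facts)
    fired: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # A rule fires when its antecedents are a subset of the known facts
            if rule.antecedents <= known and rule.consequent not in known:
                known.add(rule.consequent)
                fired.append(rule.name)
                changed = True
    return known, fired


# Hypothetical knowledge base for a security-monitoring scenario
rules = [
    Rule("R1", frozenset({"many_failed_logins", "off_hours_access"}), "suspicious_session"),
    Rule("R2", frozenset({"suspicious_session", "privileged_account"}), "raise_alert"),
]
known, trace = forward_chain(
    {"many_failed_logins", "off_hours_access", "privileged_account"}, rules
)
print(trace)                   # ['R1', 'R2']
print("raise_alert" in known)  # True
```

Forward chaining is only one inference strategy; many expert systems also used backward chaining, starting from a goal and working back to the facts that would support it. The `trace` list hints at why such systems were considered explainable: every conclusion can be traced to the rules that produced it.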

With reference to Table 2, we see that AI systems have evolved significantly to become plausible assistants for automating and augmenting work processes. However, organizations must ensure these new systems are trustworthy, and remain so, given the complications that could otherwise arise. AI systems could be exposed to traditional cybersecurity issues such as unauthorized access, unsecured credentials, backdooring (e.g. supply-chain compromise), and data exfiltration. They also face domain-specific risks, such as poisoned training datasets, insufficient guardrails, erosion of model integrity, and malicious prompt engineering (e.g. prompt injection). While technical controls may mitigate some of these risks, governance and philosophical approaches could bolster the resiliency and trustworthiness of the AI systems in use. I will briefly discuss the technical, governance, and philosophical approaches that AI users should be aware of.
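
One simple technical control against supply-chain backdooring and silent tampering with model files is to pin a cryptographic digest of each model artifact when it is approved, then verify that digest before loading. The Python sketch below assumes the trusted digest is distributed through a separate, protected channel; the file name is hypothetical. Note that a hash only shows the file is unchanged since it was pinned; it says nothing about whether the model was poisoned before that point.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the artifact still matches the digest pinned at approval time."""
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison of the two hex strings
    return hmac.compare_digest(actual, expected_sha256.lower())


# Usage with a throwaway file standing in for a (hypothetical) model artifact
with tempfile.TemporaryDirectory() as tmp:
    model = Path(tmp) / "model.bin"
    model.write_bytes(b"example weights")
    pinned = sha256_of_file(model)  # in practice, taken from a trusted release record
    print(verify_model_artifact(model, pinned))  # True
    model.write_bytes(b"tampered weights")
    print(verify_model_artifact(model, pinned))  # False
```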

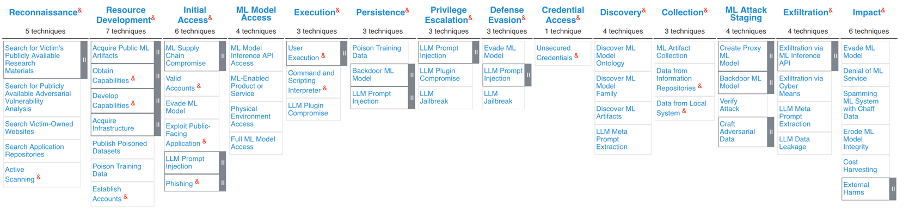

Firstly, from a technical perspective, it is good practice to model potential threats and perform a security assessment of an AI model before deploying it. An excellent guide for such an approach is the MITRE Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS) matrix (see Figure 2 below) [2]. By identifying which ATLAS techniques apply to the AI system being assessed, assessors can derive appropriate mitigations for each of them. At the same time, assessors can rely on a widely recognized framework to guide their security assessments.
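
An ATLAS-based assessment can be tracked as a simple threat register that records, for each technique, whether it applies to the system under assessment and which mitigations are in place. The Python sketch below is illustrative only: the `ThreatEntry` structure and `open_findings` helper are hypothetical, and the tactic, technique, and mitigation entries are examples that should be checked against the current ATLAS matrix before use.

```python
from dataclasses import dataclass, field


@dataclass
class ThreatEntry:
    tactic: str       # ATLAS tactic (column) the technique sits under
    technique: str    # ATLAS technique name
    applicable: bool  # assessor's judgement for the system under assessment
    mitigations: list[str] = field(default_factory=list)


def open_findings(register: list[ThreatEntry]) -> list[ThreatEntry]:
    """Return applicable techniques that have no mitigation recorded yet."""
    return [entry for entry in register if entry.applicable and not entry.mitigations]


# Illustrative entries only; verify names and tactic placement against the live matrix
register = [
    ThreatEntry("Resource Development", "Poison Training Data", True,
                ["Vet and sanitize training data sources"]),
    ThreatEntry("Execution", "LLM Prompt Injection", True),
    ThreatEntry("Exfiltration", "Exfiltration via ML Inference API", False),
]

for finding in open_findings(register):
    print(f"{finding.tactic}: {finding.technique} has no mitigation yet")
# Execution: LLM Prompt Injection has no mitigation yet
```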

Figure 2: MITRE Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS)

From a governance perspective, Control Objectives for Information and Related Technologies (COBIT) 2019 can offer guidance when users need to audit AI systems. Deploying AI systems carries risks such as a lack of alignment between IT and business needs, improper translation of IT strategic plans into IT tactical plans, and ineffective governance structures for ensuring the accountability and responsibilities associated with the AI function [3]. For example, COBIT’s DSS06 Manage Business Process Controls includes management practice DSS06.05 Ensure traceability and accountability. DSS06.05 could be used to ensure that AI activity audit trails provide sufficient information to understand the rationale behind AI decisions made within the organization [3].
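
One way to support DSS06.05 in practice is an append-only audit trail in which each AI decision record commits to the hash of the previous record, so that later edits become detectable. COBIT does not prescribe this design; the record fields, class names, and sample values below are hypothetical and chosen only to show the idea in Python.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass(frozen=True)
class AIDecisionRecord:
    timestamp: str          # ISO 8601, UTC
    model_id: str           # model name and version that produced the decision
    input_ref: str          # pointer to the input, rather than sensitive data inline
    decision: str
    rationale: str          # explanation surfaced by the system or a reviewer
    accountable_owner: str  # team or role accountable for this decision


def _hash(body: dict) -> str:
    # sort_keys gives a stable serialization, so equal bodies hash identically
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log where each entry commits to the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: AIDecisionRecord) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"record": asdict(record), "prev_hash": prev_hash}
        entry_hash = _hash(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; an edited, removed, or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {"record": entry["record"], "prev_hash": entry["prev_hash"]}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _hash(body):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append(AIDecisionRecord("2024-05-01T08:00:00Z", "credit-scorer-v3", "application/123",
                              "decline", "debt-to-income ratio above policy threshold",
                              "risk-team"))
print(trail.verify())  # True
trail.entries[0]["record"]["decision"] = "approve"  # simulate tampering
print(trail.verify())  # False
```

A hash chain only detects tampering if the latest hash is also stored somewhere an attacker cannot rewrite (for example, a separate logging system); otherwise, the whole chain could simply be recomputed.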

Finally, there is the philosophical perspective. As more organizations and users adopt AI, an AI ecosystem will inevitably form (for better or for worse). Since the modern AI ecosystem is still in its infancy, governing AI systems requires global consensus. A notable example is the crosswalk between Singapore’s Infocomm Media Development Authority (IMDA) and the US National Institute of Standards and Technology (NIST), which maps the two countries’ national AI governance frameworks and supports their interoperability [4]. Additionally, the AI Verify Foundation and IMDA have proposed a Model AI Governance Framework for Generative AI to address concerns about AI [5]. Users and organizations looking to implement AI should consider the nine dimensions raised in the proposed framework. These are summarized in Table 3 below [5]:

| Dimensions | Details |
|---|---|
| Accountability | Putting in place the right incentive structure for different players in the AI system development life cycle to be responsible to end-users |
| Data | Ensuring data quality and addressing potentially contentious training data in a pragmatic way, as data is core to model development |
| Trusted Development and Deployment | Enhancing transparency around baseline safety and hygiene measures based on industry best practices, in development, evaluation and disclosure |
| Incident Reporting | Implementing an incident management system for timely notification, remediation, and continuous improvements, as no AI system is foolproof |
| Testing and Assurance | Providing external validation and added trust through third-party testing, and developing common AI testing standards for consistency |
| Security | Addressing new threat vectors that arise through generative AI models |
| Content Provenance | Transparency about where content comes from as useful signals for end-users |
| Safety and Alignment R&D | Accelerating R&D through global cooperation among AI Safety Institutes to improve model alignment with human intention and values |
| AI for Public Good | Responsible AI includes harnessing AI to benefit the public by democratizing access, improving public sector adoption, upskilling workers and developing AI systems sustainably |
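
The Content Provenance dimension can be illustrated by binding metadata about how content was generated to the exact content bytes. This Python sketch uses an HMAC with a shared secret key, which is a simplification: only holders of the key can verify the tag, whereas public provenance schemes such as C2PA use public-key signatures and standardized manifests. The metadata fields and the key below are hypothetical.

```python
import hashlib
import hmac
import json


def _sign(manifest: dict, key: bytes) -> str:
    payload = json.dumps(manifest, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_provenance_tag(content: bytes, metadata: dict, key: bytes) -> dict:
    """Bind generation metadata to a digest of the exact content bytes."""
    manifest = {**metadata, "sha256": hashlib.sha256(content).hexdigest()}
    return {"manifest": manifest, "signature": _sign(manifest, key)}


def check_provenance_tag(content: bytes, tag: dict, key: bytes) -> bool:
    """Valid only if the manifest is untampered and still matches the content."""
    manifest = tag["manifest"]
    signature_ok = hmac.compare_digest(_sign(manifest, key), tag["signature"])
    return signature_ok and manifest["sha256"] == hashlib.sha256(content).hexdigest()


key = b"demo-key-only"  # hypothetical; a real key would live in a secrets manager
text = b"AI-generated summary of the quarterly report"
tag = make_provenance_tag(text, {"generator": "example-genai-service",
                                 "model_version": "1.0", "ai_generated": True}, key)
print(check_provenance_tag(text, tag, key))                # True
print(check_provenance_tag(text + b" (edited)", tag, key))  # False
```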

I hope this primer on AI helped everyone get up to speed on how AI systems evolved over the years and appreciate the vast potential these systems bring. However, these are turbulent times, and AI systems could be abused and compromised; this is why I also suggested potential avenues (technical, governance, and philosophical) for AI systems to become more trustworthy despite adversarial tactics and techniques. We live in an exciting age, and seeing how far we can evolve by adopting AI systems will be a rewarding experience.

References:

1. Stuart Russell and Peter Norvig. 2020. Artificial Intelligence: A Modern Approach (4th ed.). Pearson, USA.

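2. MITRE. ATLAS Matrix. https://atlas.mitre.org/matrices/ATLAS

3. ISACA. 2018. Auditing Artificial Intelligence. ISACA, USA.

4. Infocomm Media Development Authority (IMDA). 2024. Press release on the public consultation for the Model AI Governance Framework for Generative AI. https://www.imda.gov.sg/resources/press-releases-factsheets-and-speeches/press-releases/2024/public-consult-model-ai-governance-framework-genai

5. AI Verify Foundation and IMDA. 2024. Proposed Model AI Governance Framework for Generative AI. https://aiverifyfoundation.sg/downloads/Proposed_MGF_Gen_AI_2024.pdf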

-----------

Yee Ching Tok, Ph.D., ISC Handler
