So where did those Satori attacks come from?

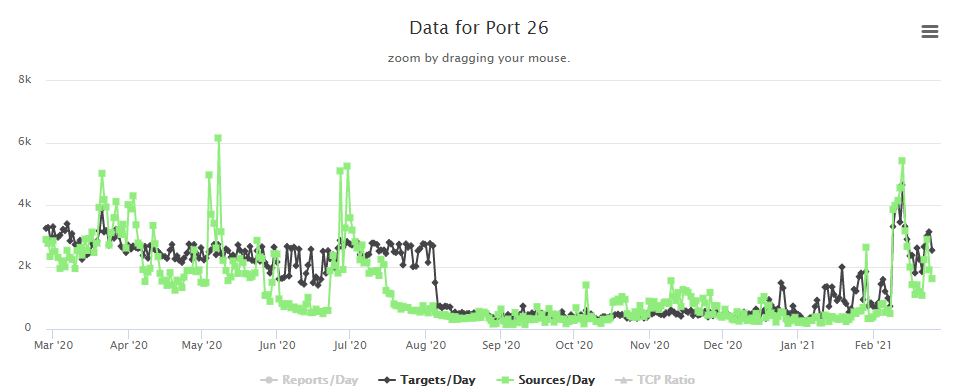

Last week I posted about a new Satori variant scanning on TCP port 26 that I was picking up in my honeypots. Things have slowed down a bit, but levels are still above where they had been since mid-July 2020 on port 26.

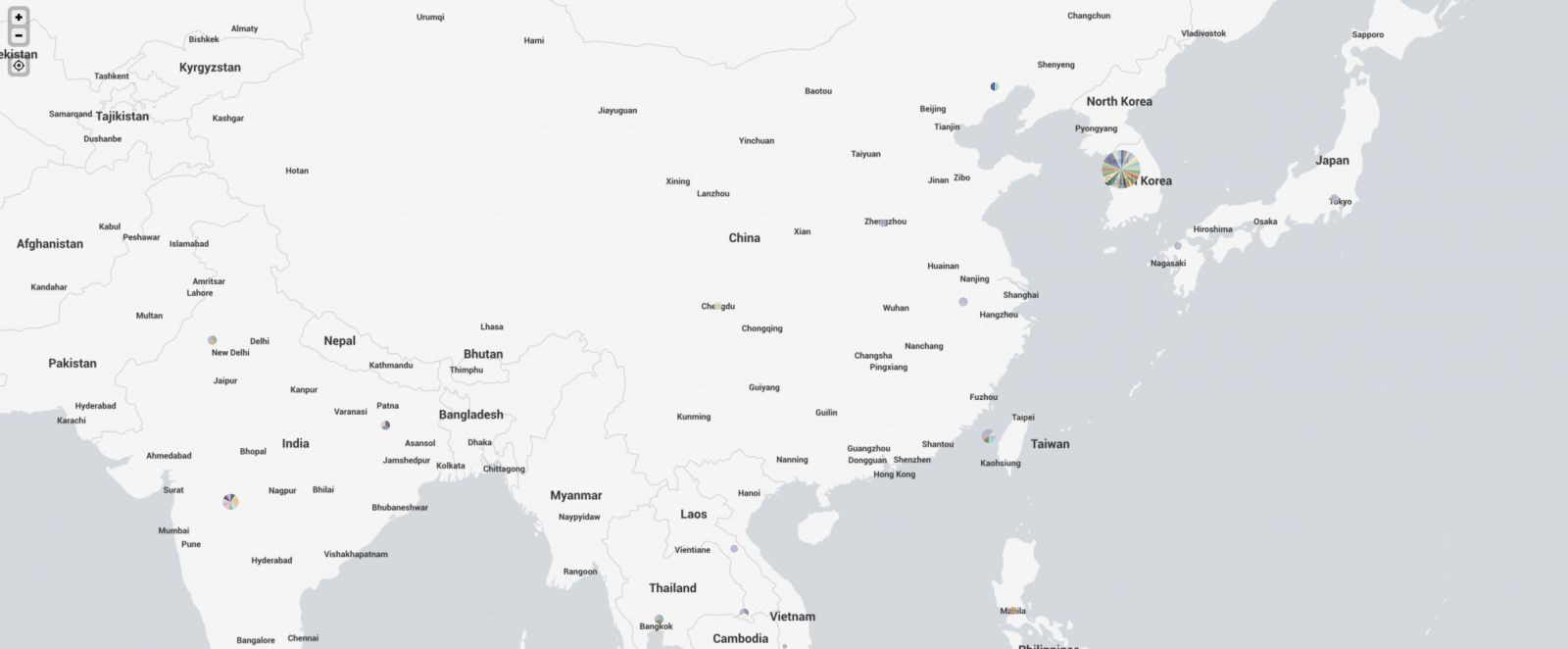

In some discussion afterward, one question that came up was where the attacks were coming from. My first thought was to run the IPs through the MaxMind DB to geolocate and map them. I looked around for a Python script that would do the job using the MaxMind and Google Maps APIs, but I didn't find what I was hoping for. I did find a few things that I can probably make work eventually, and if I have some time after I teach next week, perhaps I'll work more on that. In the meantime, Xavier threw the IPs into Splunk for me and got me some maps showing where the attacks were coming from. It turns out that the 384 attacks I recorded in 2 of my honeypots over the first 3 or 4 days of the spike came from 340 distinct IPs. The verdict is... most of them were coming from Korea. Here's what we got.
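For anyone who wants to try the MaxMind route, here is a minimal Python sketch of the per-country tally. It is not the script I was looking for and it is not what Xavier ran in Splunk. The function names are made up for illustration, and `maxmind_lookup` assumes that the third-party `geoip2` package is installed and that you have a MaxMind country database file on disk.

```python
from collections import Counter
from typing import Callable, Iterable, Optional


def tally_countries(ips: Iterable[str],
                    lookup: Callable[[str], Optional[str]]) -> Counter:
    """Count distinct source IPs per country code.

    `lookup` maps an IP string to a country code, or None if unknown.
    """
    counts: Counter = Counter()
    for ip in set(ips):  # de-duplicate: several attacks can share one source IP
        counts[lookup(ip) or "unknown"] += 1
    return counts


def maxmind_lookup(db_path: str) -> Callable[[str], Optional[str]]:
    """Build a lookup backed by a local MaxMind .mmdb file (assumed setup).

    Needs the third-party `geoip2` package; `db_path` is a hypothetical
    path to a GeoLite2/GeoIP2 Country database.
    """
    import geoip2.database
    import geoip2.errors

    reader = geoip2.database.Reader(db_path)

    def lookup(ip: str) -> Optional[str]:
        try:
            return reader.country(ip).country.iso_code
        except geoip2.errors.AddressNotFoundError:
            return None

    return lookup
```

Calling `tally_countries(ips, maxmind_lookup("GeoLite2-Country.mmdb")).most_common(10)` would then give the top ten source countries, which is enough to feed a map or a simple table.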

And if I zoom in on Asia, we see this:

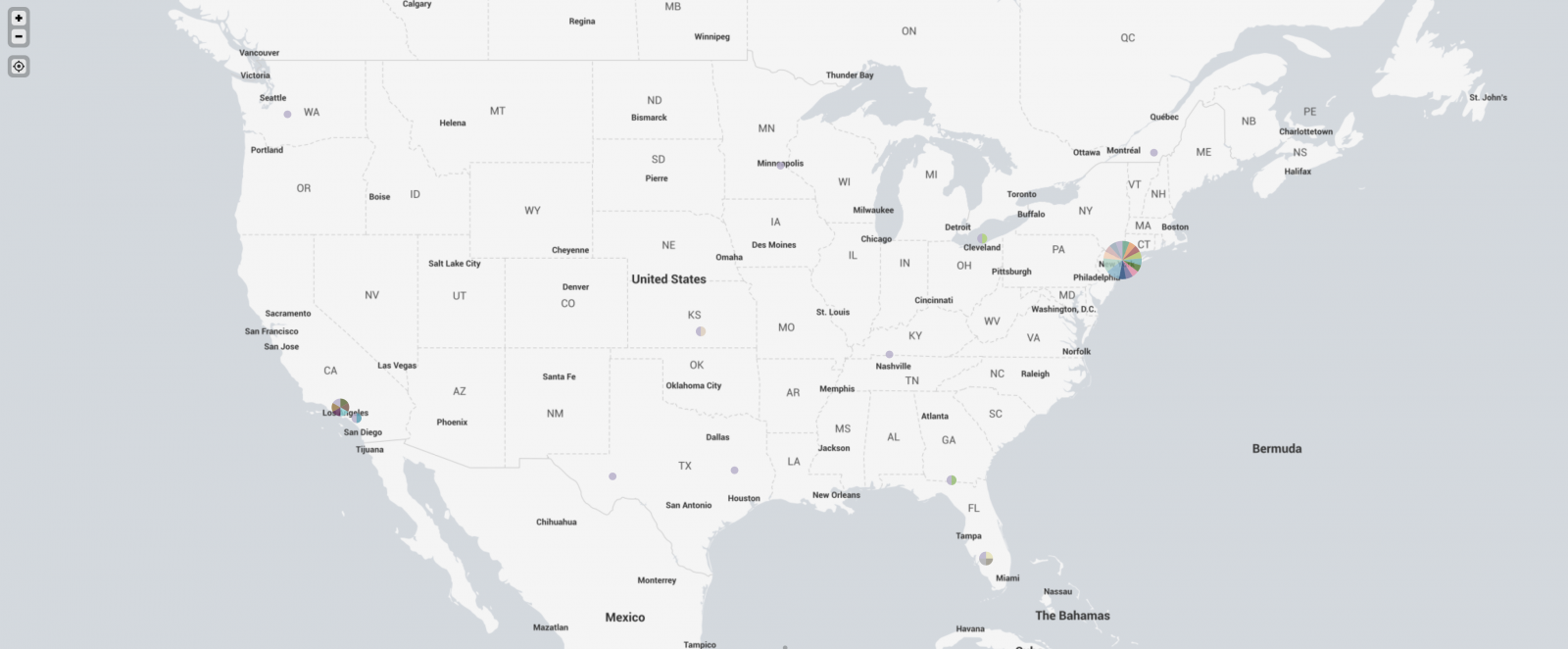

And, finally, I took a look at the US.

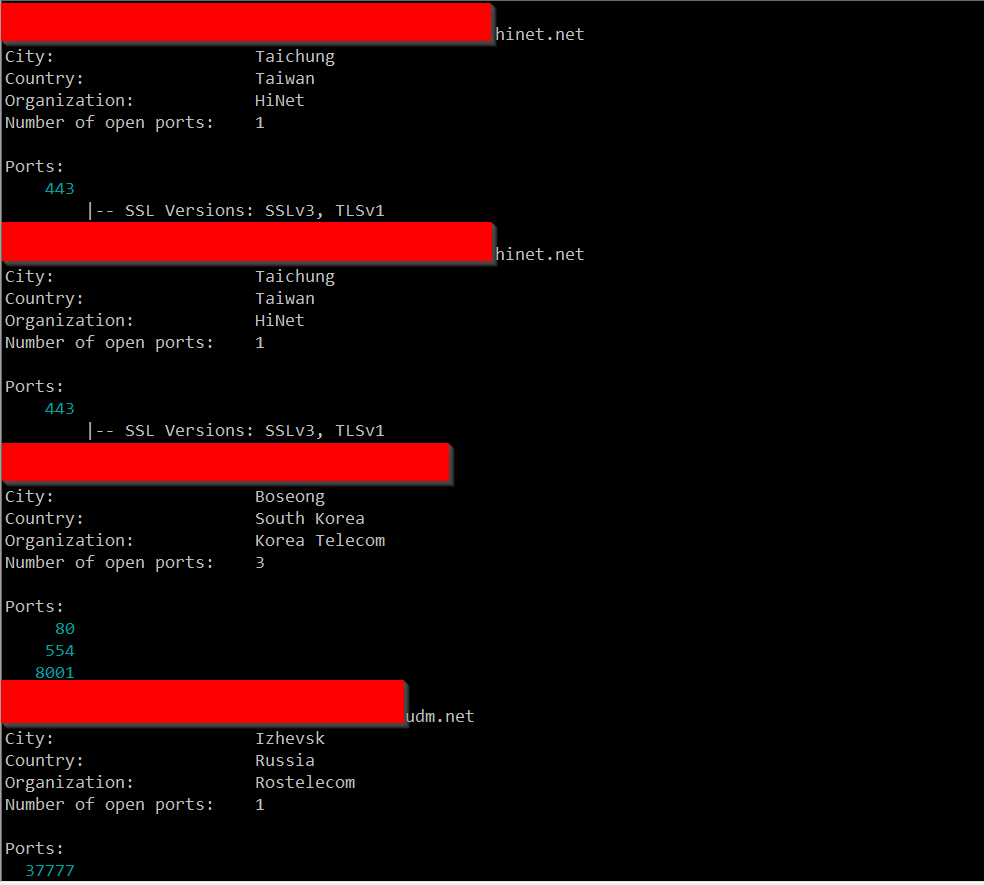

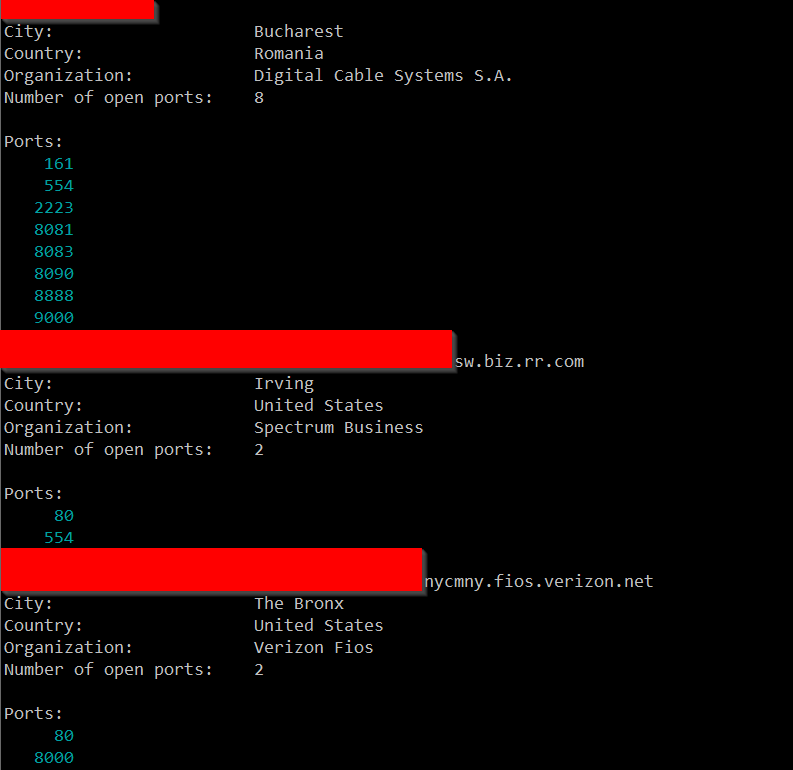

So, I'm not sure exactly what to make of all of this. I ran a few of these IPs through Shodan and didn't come up with anything in particular that they seemed to have in common, but maybe I didn't run enough of them.

If nothing else, this has given me some ideas for projects I need to work on when I have some free time. If anyone has any additional insight, I welcome your comments below or via e-mail or our contact page.

---------------

Jim Clausing, GIAC GSE #26

jclausing --at-- isc [dot] sans (dot) edu

Forensicating Azure VMs

With more and more workloads migrating to "the Cloud", we see post-breach forensic investigations also increasingly moving from on-premises to remote instances. If we are lucky and the installation is well engineered, we will encounter a "managed" virtual machine setup, where a forensic agent or EDR (endpoint detection & response) product is pre-installed on our affected VM. Alas, in my experience, this so far seems to be the exception rather than the norm. It almost feels like some lessons learned in the past two decades about EDR have been thrown out again, just because ... "Cloud".

If you find yourself in such a situation, like I recently did, here is a throwback to the forensics methodology from two decades ago: creating a disk image and getting a forensic timeline off an affected computer. That the computer is a VM in the Cloud makes things marginally easier, but with modern disk sizes approaching terabytes, disk image timelining is neither elegant nor quick. But it still works.

Let's say we have a VM that has been hacked. In my example, for demonstration purposes, I custom-created a VM named "whacked" in an Azure resource group named "whacked". The subscription IDs and resource IDs below have been obfuscated to protect the not-so-innocent Community College where this engagement occurred.

If you have the Azure CLI installed and have the necessary privileges, you can use command-line / PowerShell commands to forensicate. I recommend this over the Azure GUI, because it allows you to keep a precise log of exactly what you were doing.

First, find out which OS disk your affected VM is using:

powershell> $vm=az vm show --name whacked --resource-group whacked | ConvertFrom-JSON

powershell> $vm.storageProfile.osDisk.managedDisk.id

/subscriptions/366[...]42/resourceGroups/whacked/providers/Microsoft.Compute/disks/whacked_disk1_66a[...]7
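If you keep your case notes in a script, it can help to split such a resource ID into its parts, so that the subscription, resource group, and disk name are logged separately. The following Python helper is a small sketch for IDs that follow the eight-segment layout shown above; the function name is made up for this example, and nested resource types are not handled.

```python
def parse_resource_id(resource_id: str) -> dict:
    """Split an Azure resource ID of the form
    /subscriptions/<sub>/resourceGroups/<rg>/providers/<ns>/<type>/<name>
    into its named components.
    """
    parts = resource_id.strip("/").split("/")
    if (len(parts) != 8
            or parts[0].lower() != "subscriptions"
            or parts[2].lower() != "resourcegroups"
            or parts[4].lower() != "providers"):
        raise ValueError(f"unexpected resource ID layout: {resource_id!r}")
    return {
        "subscription": parts[1],
        "resource_group": parts[3],
        "namespace": parts[5],   # e.g. Microsoft.Compute
        "type": parts[6],        # e.g. disks or snapshots
        "name": parts[7],
    }
```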

Then, get more info about that OS disk. This will show, for example, the size of the OS disk, when it was created, which OS it uses, etc.:

powershell> $disk=az disk show --ids $vm.storageProfile.osDisk.managedDisk.id | ConvertFrom-JSON

Create a snapshot of the affected disk. Nicely enough, this can be done while the VM is running. All you need is "Contributor" or "Owner" rights on the resource group or subscription where the affected VM is located:

powershell> $snap = az snapshot create --resource-group whacked --name whacked-snapshot-2021FEB20 --source "/subscriptions/366[...]42/resourceGroups/whacked/providers/Microsoft.Compute/disks/whacked_disk1_66a[...]7" --location centralus | ConvertFrom-JSON

Take note of the "location" parameter: it has to match the location of the disk. Otherwise you'll get an obscure and unhelpful error, like "disk not found".

powershell> $snap.id

/subscriptions/366[...]42/resourceGroups/whacked/providers/Microsoft.Compute/snapshots/whacked-snapshot-2021FEB20
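One way to avoid the location mismatch is to take the location straight from the disk's own JSON instead of typing it by hand. The Python sketch below only assembles the argument list for the same `az snapshot create` call used above; the function name is hypothetical, and it assumes that `disk` is the parsed output of `az disk show`, which carries `id` and `location` fields.

```python
from typing import List


def snapshot_create_args(disk: dict, resource_group: str,
                         snapshot_name: str) -> List[str]:
    """Build the `az snapshot create` arguments for a given disk.

    The location is copied from the disk itself, so the snapshot is
    always requested in the region where the disk lives.
    """
    return [
        "az", "snapshot", "create",
        "--resource-group", resource_group,
        "--name", snapshot_name,
        "--source", disk["id"],
        "--location", disk["location"],
    ]
```

How you run the resulting list (pasting it into your shell, or handing it to something like `subprocess.run`) depends on your platform; logging it first keeps the audit trail intact either way.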

Next, we create a temporary shared access signature (SAS) for this snapshot:

powershell> $sas=(az snapshot grant-access --duration-in-seconds 7200 --resource-group whacked --name whacked-snapshot-2021FEB20 --access-level read --query [accessSas] -o tsv)

This allows us to copy the snapshot out of the affected subscription and resource, to a storage account that we control and maintain for forensic purposes:

powershell> az storage blob copy start --destination-blob whacked-snapshot-2021FEB20 --destination-container images --account-name [removed] --auth-mode login --source-uri "`"$sas`""

Take note of the "`"$sas`"" quotation... As far as I can tell, this is not mentioned anywhere in the Microsoft Docs. But the SAS contains characters like "&" that PowerShell interprets as commands, so unless you double-quote the string in exactly this way, the command will never work, and the resulting error messages will be extremely unhelpful.

The "--account-name" value that I removed is the name of your forensics Azure Storage Account, where you have a container named "images". The copy operation itself is asynchronous and is going to take a while. You can check the status by using "az storage blob show", like this:

powershell> (az storage blob show -c images --account-name forensicimage -n whacked-snapshot-2021FEB20 --auth-mode login | ConvertFrom-JSON).properties.copy.progress

943718400/136367309312

powershell> (az storage blob show -c images --account-name forensicimage -n whacked-snapshot-2021FEB20 --auth-mode login | ConvertFrom-JSON).properties.copy.progress

136367309312/136367309312

Once both numbers match, all bytes have been copied. In our case, the disk was ~127GB.
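If you poll the copy status from a script, the "copied/total" string is easy to turn into a percentage and a completion check. This is a small Python sketch with made-up function names; it assumes the progress value always has the two-number form shown above.

```python
from typing import Tuple


def parse_copy_progress(progress: str) -> Tuple[int, int]:
    """Split a 'copied/total' progress string into two byte counts."""
    copied, total = progress.strip().split("/")
    return int(copied), int(total)


def percent_done(progress: str) -> float:
    """Return the copy progress as a percentage."""
    copied, total = parse_copy_progress(progress)
    return 100.0 * copied / total if total else 0.0


def copy_complete(progress: str) -> bool:
    """True once every byte of a non-empty blob has been copied."""
    copied, total = parse_copy_progress(progress)
    return total > 0 and copied == total
```

For the first status line above, `percent_done` gives a bit under 0.7 percent, which is a good hint that it is time to get a coffee.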

Next step, create a new disk from the image. Make sure to pick a --size-gb that is bigger than your image:

powershell> az disk create --resource-group forensicdemo --name whacked-image --sku 'Standard_LRS' --location 'centralus' --size-gb 150 --source "`"https://[removed].blob.core.windows.net/images/whacked-snapshot-2021FEB20`""
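To pick a safe value for --size-gb, you can round the byte count from the copy status up to the next whole gigabyte. The Python sketch below assumes that --size-gb is counted in units of 1024^3 bytes; the function name and the `headroom_gb` parameter are made up for this example.

```python
GIB = 1024 ** 3  # assumption: --size-gb counts units of 1024^3 bytes


def min_disk_size_gb(image_bytes: int, headroom_gb: int = 0) -> int:
    """Smallest whole-GB size that can hold the image, plus optional headroom."""
    whole_gb = -(-image_bytes // GIB)  # integer ceiling division
    return whole_gb + headroom_gb
```

For the 136367309312-byte image above, this comes out at 128, so the 150 used in the command leaves plenty of room.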

Then, attach this new disk to a SANS SIFT VM that you have running in Azure for the purpose. In my example, the VM is called "sift" and sits in the resource group "forensicdemo":

powershell> $diskid=$(az disk show -g forensicdemo -n whacked-image --query 'id' -o tsv)

powershell> az vm disk attach -g forensicdemo --vm-name sift --name $diskid

Once this completes, you can log in to the SIFT VM, and mount the snapshot:

root@siftworkstation:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 4G 0 disk

sda1 8:1 0 4G 0 part /mnt

sdb 8:16 0 30G 0 disk

sdb1 8:17 0 29.9G 0 part /

sdb14 8:30 0 4M 0 part

sdb15 8:31 0 106M 0 part /boot/efi

sdc 8:32 0 64G 0 disk /plaso

sdd 8:48 0 150G 0 disk

sdd1 8:49 0 500M 0 part

sdd2 8:50 0 126.5G 0 part

sr0 11:0 1 628K 0 rom

root@siftworkstation:~#

Looks like our image ended up getting linked as "sdd2". Let's mount it:

root@siftworkstation:/plaso# mkdir /forensics

root@siftworkstation:/plaso# mount -oro /dev/sdd2 /forensics/

root@siftworkstation:/plaso# ls -al /forensics/Windows/System32/cmd.exe

-rwxrwxrwx 2 root root 289792 Feb 5 01:16 /forensics/Windows/System32/cmd.exe

root@siftworkstation:/plaso# sha1sum /forensics/Windows/System32/cmd.exe

f1efb0fddc156e4c61c5f78a54700e4e7984d55d /forensics/Windows/System32/cmd.exe

Once there, you can run Plaso / log2timeline.py, or forensicate the disk image in any other way desired. If live forensics is more your thing, you can also re-create a VM from the snapshot image (az vm create --attach-os-disk..., with --admin-password and --admin-username parameters to reset the built-in credentials), and then log into it. Of course, doing so alters any ephemeral evidence, because you actually boot from the affected disk. But if there is something that you can analyze faster "live", go for it. After all, you still have the original image in the Azure Storage Account, so you can repeat this step as often as necessary until you have what you need.

If the VM was encrypted with a custom key, there is an additional hurdle. In this case, $disk.EncryptionSettingsCollection will be "not null", and you additionally need access to the affected subscription's Azure Key Vault to retrieve the BEK and KEK values of the encrypted disk. If this is the case in your environment, I recommend taking a look at the Microsoft-provided Workbook https://docs.microsoft.com/en-us/azure/architecture/example-scenario/forensics/ for Azure forensics, which mostly encompasses the commands listed above, but also supports private key encrypted VM disks.
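If you script the workflow, the same "not null" check can be done up front, before you spend time on the copy. The Python sketch below assumes that `disk` is the parsed JSON from `az disk show` and that the field appears there as `encryptionSettingsCollection`; the function name is hypothetical.

```python
def needs_key_vault(disk: dict) -> bool:
    """True if the disk JSON carries encryption settings, meaning the
    BEK/KEK values have to be fetched from Key Vault before analysis."""
    return disk.get("encryptionSettingsCollection") is not None
```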
